Cleveland Legal Aid's Use of Outcomes

The Cleveland Legal Aid Society uses outcomes measures to more effectively and efficiently meet clients' needs. Key components of its outcome measures system are highlighted in the following sections:

- Why Cleveland Uses Outcomes Measures

- How Cleveland Developed Its Outcomes Measures System

- Major Outcomes Categories and Metrics Cleveland Uses

- Systems Cleveland Uses to Collect, Compile, and Analyze Outcomes Data

- Examples of the Ways Cleveland Uses Outcomes Measures

- Cleveland’s Lessons Learned About Using Outcomes Data

Why Cleveland Uses Outcomes Measures

Prior to 2007, the data Cleveland collected was limited to outputs data such as LSC Case Services Reporting (CSR) data, LSC other services (matters) data, and similar information required by specific funders. Very few funders, most notably United Way, required outcomes data at the time. Cleveland did collect some outcomes data for its own purposes, but its value was limited because it was not compiled consistently and did not capture the most significant outcomes of the program’s client services.

In 2007, Cleveland's management determined that the data it was collecting was inadequate because it did not allow the program to meaningfully analyze and assess the results of its work. CSR case closing data and similar output information documented the volume and levels of the program’s work in different substantive law areas or community engagement and partnerships, but the program could not show how, and to what extent, its work benefited the client community.

These limitations led the program to develop a more comprehensive outcomes measures system. The program did not replace CSR-type data and other outputs and descriptive data with outcomes data. Instead, it uses the two kinds of data in combination.

Cleveland uses outcomes data to better accomplish four fundamental, interrelated objectives.

Objective 1: Assess the program’s success in achieving the program’s mission and strategic goals

Cleveland’s core mission is to secure justice and resolve fundamental problems for low-income and vulnerable people by providing high-quality legal services and working for systemic solutions.

Its strategic goals—the desired end results—for client services are to:

- Improve safety and health

- Promote education and financial stability

- Secure decent, affordable housing

- Ensure that the justice system and government entities are accountable and accessible

Cleveland determined that data about the results of its work—outcomes data—were essential to effectively assess whether and to what extent it was accomplishing its mission and goals. And without outcomes data, the program could not determine how well its work was aligned with its mission and goals, nor could it identify how its work might need to be changed to ensure it was most effectively focused on the mission and goals.

Objective 2: Allocate resources and develop strategies

Cleveland also found that the outcomes data it collects allows the program to gain a more complete understanding of the results and impact of its work, which in turn enables the program to more efficiently allocate its limited resources. For example, the program needed outcomes data to assess whether its allocation of resources among different substantive law areas or among different regions within the service area was most appropriate. Similarly, outcomes data were essential to assess the effectiveness of advocacy strategies and to develop the strategies that would provide the greatest benefits to the client community.

Objective 3: Fundraising and “telling our story”

The program determined that CSR data and other outputs data did not demonstrate the value of its work or convince other potential funders that supporting civil legal aid is a good investment, especially when the program was competing with many other social services organizations for limited resources. In fact, some funders had a “So what?” reaction to proposals with deliverables that were limited to CSR or similar outputs data. Funders wanted to know what impact their investment would make in clients’ lives.

The use of CSR and other outputs data had similar limitations when the program tried to tell its story to the local media or community partners. Anecdotes about the ways the program helped individual clients could be very compelling, but the program needed more comprehensive data to effectively demonstrate that the benefits for individual clients were examples of the broad benefits it provided the community.

Objective 4: Improve organizational performance and staff engagement

Cleveland staffers were skeptical when the program’s leadership first began discussing outcome measures. They expressed concerns that the use of outcomes would divert resources from client services to bureaucratic record keeping, drive the program to “cherry-pick” easy cases, yield limited benefits, or be used as an inappropriate staff evaluation tool.

Program management successfully addressed these concerns in the development and implementation of the system. (The outcomes data is not used to evaluate individual employees’ performance.)

Staff have come to embrace and value the outcome measures system.

- Staff were integrally involved in the system’s development and its ongoing improvements.

- They have found the data collection process to be efficient and easy to use.

- Advocates consider the data essential for ensuring the efficient allocation of resources and for developing and improving advocacy strategies.

- By demonstrating the impact and value of their work, the system has strengthened morale.

- The system’s benefits have fostered increased staff engagement in program planning.

- The outcome system data have enabled staff to identify ways to enhance the impact and effectiveness of their work.

How Cleveland Developed Its Outcomes Measures System

Cleveland spent about a year developing its outcomes measures system. It had to develop the system’s substantive and technical components and ensure that these components were effectively integrated. The program recognized that the staff had to be integrally involved in the development process. The board was also interested in the program developing more meaningful measurements of achievement, and supported the development of the new system.

The development process was not as linear as the description below implies. Instead, it was an ongoing iterative process in which proposals would be developed, analyzed by staff stakeholders, and then revised.

Outcomes are collected only for extended services cases.

Phase 1: Articulate the vision and engage staff

The program’s executive director and deputy director led and coordinated the effort. The first phase in the process was for management to articulate to the staff its vision for the system as clearly and as frequently as possible. Management focused on how the system would enhance the program’s effectiveness in serving clients as well as how the system would help staff identify and improve their own work. Staff expressed their views about the potential system’s benefits and pitfalls and also made suggestions about how the system could be most effectively developed and implemented. The concerns and ideas of all program staff were essential to the successful development and implementation of the system.

Phase 2: Identify substantive outcomes

After management set out its vision and actively solicited feedback and input from the staff, it turned to members of the program’s intake unit and four substantive law practice groups:

- Consumer, including Foreclosure (LSC CSR problem codes 01-09)

- Housing, excluding Foreclosure (problem codes 60-69)

- Family (problem codes 30-39), and

- Health, Education, Work, Income, and Immigration (problem codes 11-19, 21-29, 41-49, 51-59, 71-79, and 81-89)

The practice groups were tasked with developing the substantive elements of the system. Specifically, each group was charged with identifying the outcome measures and indicators that it thought would best enable it to assess and demonstrate the benefits of its work to clients.

The practice groups developed their outcome measures using the same framework. Outcomes needed to be defined from the client’s perspective. The key questions they asked were:

- Why are we doing this work?

- What do we want to accomplish in each substantive law area?

- What data would we need (and can obtain) that would enable us to know what we were accomplishing?

- What do we need to report to funders?

In addition, the outcomes needed to be based on the LSC CSR substantive issue categories (“problem codes”)—e.g., Consumer/Finance, Family, Health, and Housing. Outcome questions are specifically associated with each of these groups, so that the number of questions required for any case is only a small subset of the entire list of questions.
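One way to picture this association is a lookup from a case's CSR problem code to its practice group, and from there to that group's question list. The sketch below is illustrative only. The code ranges are taken as stated in the practice group list above, so any special handling (for example, routing foreclosure cases to the Consumer group) is not modeled. The Consumer questions are the four examples named later in this document, and every function and variable name is hypothetical rather than drawn from Cleveland's CMS.

```python
# Hypothetical sketch: names and structure are assumptions, not Cleveland's CMS schema.
PRACTICE_GROUP_RANGES = {
    "Consumer": [(1, 9)],
    "Housing": [(60, 69)],
    "Family": [(30, 39)],
    "HEWII": [(11, 19), (21, 29), (41, 49), (51, 59), (71, 79), (81, 89)],
}

# Only the Consumer examples named in this document are listed here;
# each other group would carry its own question list.
OUTCOME_QUESTIONS = {
    "Consumer": [
        "Obtained monetary claim",
        "Reduced/avoided debt",
        "Increased assets",
        "Obtained/restored utilities",
    ],
}


def practice_group_for(problem_code):
    """Return the practice group whose code range contains problem_code."""
    for group, ranges in PRACTICE_GROUP_RANGES.items():
        if any(low <= problem_code <= high for low, high in ranges):
            return group
    raise ValueError(f"No practice group covers problem code {problem_code}")


def questions_for(problem_code):
    """Return only the outcome questions that apply to this case's group."""
    return OUTCOME_QUESTIONS.get(practice_group_for(problem_code), [])
```

With this shape, an advocate closing a case with a Consumer problem code would see four questions instead of the full list for all groups.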

A major challenge was developing a list of outcomes (and associated indicators) that would not be too long and detailed, but at the same time would provide enough information to effectively assess and demonstrate the impact of the advocates’ work. The outcome measures the groups ultimately developed are identified in the "Major outcomes categories and metrics" section below.

This process took about six months.

Phase 3: Develop the technical requirements

The third phase of the process involved developing the technical requirements for collecting the outcomes measures and indicators. The program’s technology staff led this effort. They had to develop the functionalities in the case management system (CMS) and other data systems that would: (a) enable advocates to easily and efficiently input the results of their casework; (b) provide for effective data compilation and analysis; and (c) produce the reports the program needed to assess and show the impact of its work to different audiences. And, as indicated above, these functionalities needed to be based on and integrated with the LSC CSR problem codes.

The program’s CMS lacked the necessary functionalities. Therefore, Cleveland staff worked with staff of the Ohio Legal Assistance Foundation to develop these functionalities. These are discussed in the "Systems to collect, compile and analyze outcomes data" section below.

This process took about four months.

Phase 4: Staff training

The fourth phase of the process was to train staff on how to use the outcome measures and the CMS. All staff using the system were trained. The training was coordinated by management, IT staff, and the practice group leaders. Rather than formal training, small group conversations were conducted with members of different practice groups. After an overview of the system functions and content, IT staff and managers engaged with staff to respond to questions, provide necessary guidance on specific issues, identify areas for additional system improvements and further assistance, etc. An initial training period of about two months was followed by an ongoing process of assessment and adjustment of the system to enhance its effectiveness.

This process took about two months.

Major Outcomes Categories and Metrics

This section identifies the major outcomes categories Cleveland uses as well as the types of indicators and methods on which these measures are based. The outcomes correspond to three of Cleveland’s strategic goals:

- Improving safety and health

- Promoting education and economic stability

- Securing decent, affordable housing

Cleveland is able to track its achievements related to these goals by aggregating (combining) the outcomes data from different substantive law case types. See Cleveland's Strategic Goal Outcomes for the list of outcomes that are combined to calculate the total outcomes for each of these goals.
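The aggregation can be sketched as a simple count of achieved outcomes per goal. The actual outcome-to-goal assignments are in the referenced list and are not reproduced here, so the assignments below are invented placeholders that only show the mechanics. The function name and data shapes are likewise assumptions.

```python
from collections import Counter

# Placeholder assignments for illustration only; they are NOT Cleveland's mapping.
GOAL_FOR_OUTCOME = {
    "Obtained/restored utilities": "Improving safety and health",
    "Reduced/avoided debt": "Promoting education and economic stability",
    "Increased assets": "Promoting education and economic stability",
}


def roll_up(achieved_outcomes):
    """Count achieved outcomes per strategic goal.

    achieved_outcomes: iterable of outcome names, one entry per "yes" answer
    across all closed cases. Outcomes with no assigned goal are skipped.
    """
    totals = Counter()
    for outcome in achieved_outcomes:
        goal = GOAL_FOR_OUTCOME.get(outcome)
        if goal is not None:
            totals[goal] += 1
    return dict(totals)
```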

There are two basic outcome categories:

Category 1: Outcomes that describe the result of the case or matter

These outcomes are associated with particular CSR problem code areas (e.g., Consumer/Finance, Housing, Family). For example, the outcomes for the Consumer/Finance area include, but are not limited to:

- “Obtained monetary claim”

- “Reduced/avoided debt”

- “Increased assets”

- “Obtained/restored utilities”

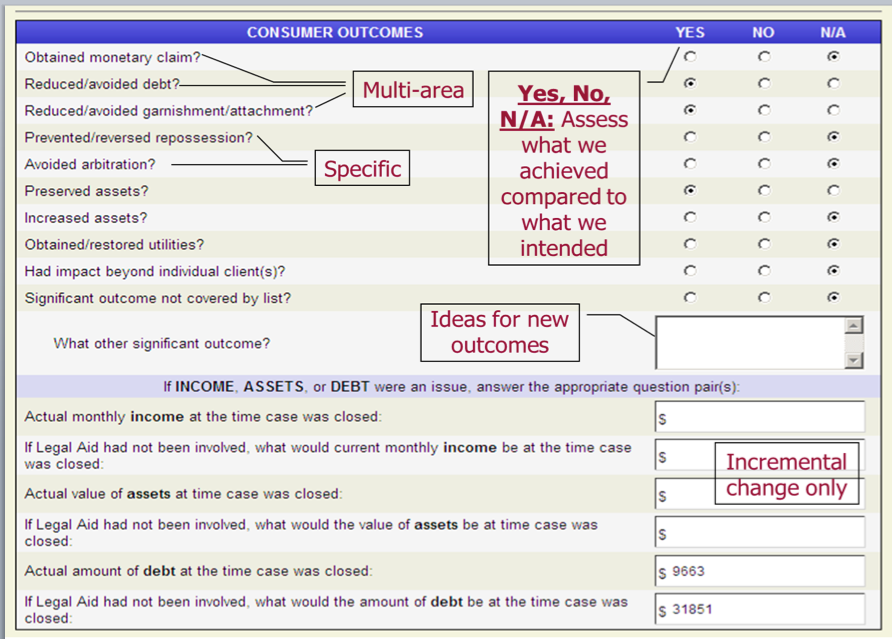

Each of these potential outcomes requires the advocate to indicate "yes," "no," or "not applicable." That way, Cleveland can track the percentage of success for each outcome indicator. Requiring responses to every question also helps ensure data integrity. Cleveland Legal Aid does not have to wonder whether the advocate only picked the first outcomes on the list and did not record the most important or all of the outcomes achieved.
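A minimal sketch of how such responses could be turned into success percentages follows. It assumes that "not applicable" answers are excluded from the denominator, which this document does not state. The function names and the dictionary-per-case layout are hypothetical.

```python
from collections import Counter

VALID_ANSWERS = {"yes", "no", "not applicable"}


def unanswered(questions, answers):
    """List the questions that lack a valid response (a data integrity check)."""
    return [q for q in questions if answers.get(q) not in VALID_ANSWERS]


def success_rates(case_answers):
    """Compute the share of applicable cases answered "yes" for each outcome.

    case_answers: list of dicts mapping outcome name -> answer string.
    Assumption: "not applicable" responses are left out of the denominator.
    Returns None for an outcome that was never applicable.
    """
    counts = {}
    for answers in case_answers:
        for outcome, answer in answers.items():
            counts.setdefault(outcome, Counter())[answer] += 1

    rates = {}
    for outcome, tally in counts.items():
        applicable = tally["yes"] + tally["no"]
        rates[outcome] = tally["yes"] / applicable if applicable else None
    return rates
```

Because every question must be answered, a missing response can be flagged at closing instead of being silently read as "no."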

View the complete lists of outcomes collected for each of the substantive law areas.

Category 2: Financial outcomes secured for the client (where relevant)

These include data regarding changes in the amounts of a client’s monthly income, assets, or debts that can be attributed to Cleveland’s work. The two basic questions for including and calculating financial data are: “If Legal Aid had not been involved, what would the client's [value/amount of income/asset/debt] be at the time the case was closed?” and “What was the client's [value/amount of income/asset/debt] at the time the case was closed?”
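The two questions imply a simple difference between the actual figure and the estimated figure without Legal Aid's involvement. The sketch below is one reading of that calculation, not Cleveland's documented formula. It assumes that a benefit for income and assets is an increase, that a benefit for debt is a reduction, and it uses hypothetical field names.

```python
from dataclasses import dataclass


@dataclass
class FinancialAnswer:
    kind: str                 # "income", "asset", or "debt"
    without_legal_aid: float  # advocate's estimate had Legal Aid not been involved
    at_case_closing: float    # actual amount when the case was closed


def attributable_benefit(answer):
    """Return the financial change attributed to Legal Aid's work.

    Assumption: for debt, a lower amount at closing counts as a positive benefit.
    """
    change = answer.at_case_closing - answer.without_legal_aid
    return -change if answer.kind == "debt" else change
```

For example, monthly income of 1,100 at closing against an estimated 800 without Legal Aid yields a benefit of 300, and debt of 1,500 at closing against an estimated 5,000 yields a benefit of 3,500.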

Here is the form an advocate completes at case closing that shows the financial and non-financial outcomes for a consumer case:

Systems Cleveland Uses to Compile, Analyze and Report Outcomes Data

Cleveland LAS expanded the functionalities of its case management system (CMS) in order to compile, analyze, and report outcomes data effectively and efficiently.

In-house staff and staff of Ohio Legal Assistance Foundation (OLAF) made the necessary CMS changes. The original development, testing, and refinements of the system took about four months. Given the programming and other technology tools developed since then, this process would be much easier and quicker today. (A skilled programmer could probably complete the requisite tasks in about two weeks.)

Functionalities that were added to the CMS include:

- The ability for case handlers to record the appropriate outcomes data for closed cases. On the front end, case handlers see the questions they must answer when they close a case. Here is a screenshot with the questions for Consumer cases.

- Fields that store these answers and relate them to other CMS fields, so that the outcomes can be linked to type of case, level of service, and other key fields.

- Report formats that are most useful for staff, board, management, and funders.

- The Crystal Reports required to create those reports.

- The Excel spreadsheets required to present the data in charts and graphs.

Cleveland uses Crystal Reports to compile data and generate a variety of reports with outcomes data. A sample of these reports is highlighted below.

Some reports are automatically generated and routed to appropriate staff (e.g., executive director, practice group managers) on a monthly basis. The data in these reports can also be readily compiled for different time periods (e.g., quarterly, annually). Examples of these reports include:

- Reports that provide outcomes data for each of the LSC CSR problem code areas as well as financial outcomes data for the entire program. Here is the report with annual data for 2013.

- Three-page reports that provide the same type of information for each of the program’s four substantive law practice groups and the key outcome data for each practice group. These include data regarding case volume and case staffing (page 1), client data (page 2) and outcomes data (page 3). See an example of this report (with annual data) for the housing group. Here is the same report, combining the data for all of the practice groups.

Other reports combine different outcomes and other data.

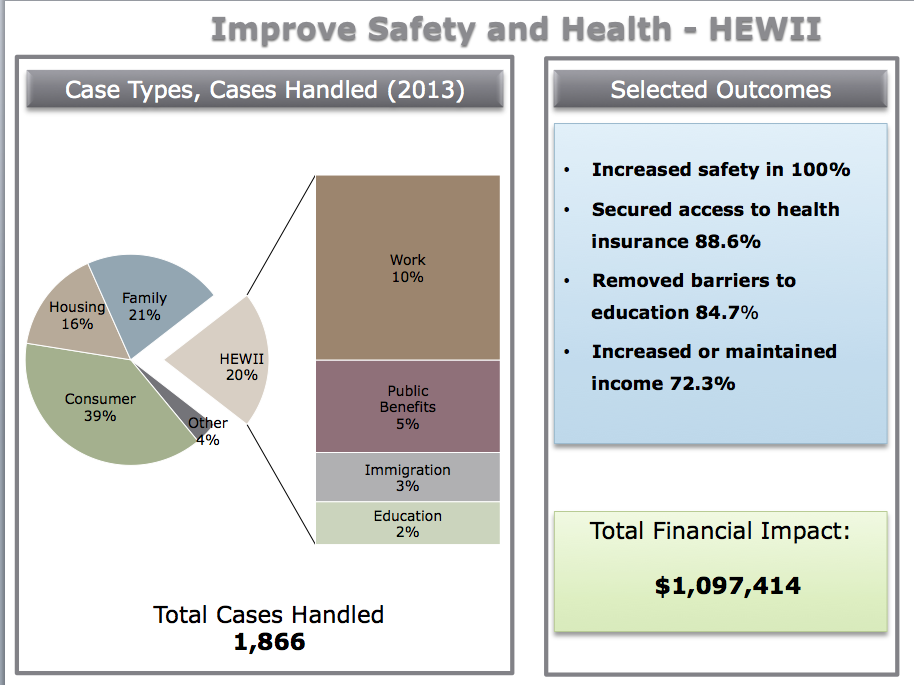

- Reports with outcomes and other data corresponding to the program’s strategic goals. Here is a report that highlights data regarding the Health, Education, Work, Income, and Immigration (HEWII) practice group:

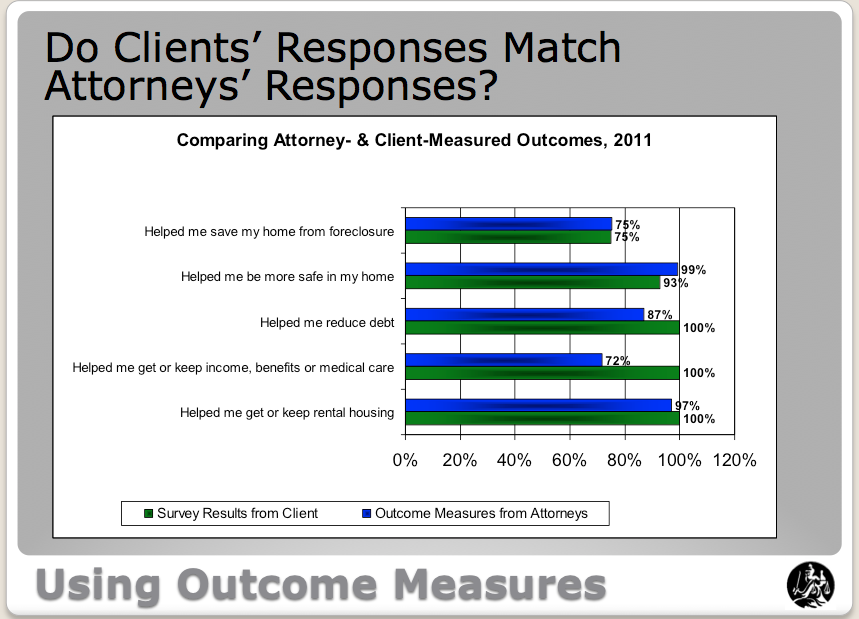

- Reports comparing clients’ and advocates' assessments of case results:

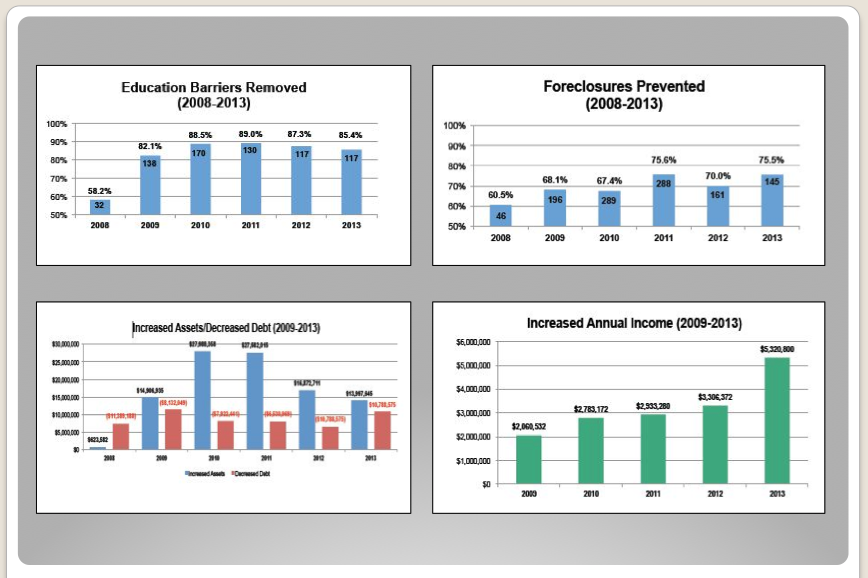

- Reports with historical trend data showing the success rates for different case types and the amount of financial benefits provided to clients:

- Funder reports that combine CSR-type data, outcomes data, and quantitative and descriptive data about the program’s outreach and other community work. See an example: Cleveland Evidence of Success-St. Lukes.

Examples of the Ways Cleveland Uses Outcomes Measures to Improve Its Services

There are many ways that Cleveland uses its outcome measures to enhance its organizational performance and client services.

- Resource allocation. At the height of the foreclosure crisis, case outcomes data showed that a percentage of clients were unable to keep their homes. Many clients would inevitably lose their homes because of their inadequate resources. Data analysis indicated that unless a client’s income was at least 75 percent of the poverty line, the client would likely lose the home. Therefore, the program decided to stop taking foreclosure cases where the client’s income was less than 75 percent of the poverty line. Instead, the program concentrated its resources on helping those clients who would be able to keep their homes if they had the program’s assistance. Cleveland LAS could not help all of the clients with incomes above 75 percent of the poverty line who needed the program’s help. However, a high percentage (about 70 percent) of the clients the program did help were able to keep their homes.

- Resource development. The use of outcomes data significantly increased Cleveland’s fundraising success. By using outcome data in their grant proposals and reports, Cleveland was able to translate the impact of its work into a language that funders understand. Most funders do not fund lawyers or access to justice. They fund programs that, for example, provide housing, stabilize families, or increase access to healthcare. By combining outcomes data with CSR-type data and information regarding the program’s work with community groups, Cleveland was able to concretely demonstrate the impact legal advice and counsel could have on achieving those outcomes. View an example of a funder report that incorporates all of these data types: Cleveland's Evidence of Success-St. Lukes.

- Staff morale. Cleveland's leadership did not initiate the use of outcomes to improve staff morale. However, that has been a very important and valuable unintended consequence. The outcomes data profiled in the various reports generated by the program demonstrate to the staff the value and benefits of their work to clients.
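The resource allocation analysis described above amounts to comparing home-retention rates on either side of an income threshold. A minimal sketch follows, assuming hypothetical closed-case records that pair an income-to-poverty ratio with a kept-home flag. The function name, record layout, and band labels are all assumptions, and the sketch does not reproduce Cleveland's actual analysis.

```python
def retention_rate_by_band(cases, threshold=0.75):
    """Compare home-retention rates below and at-or-above an income threshold.

    cases: iterable of (income_to_poverty_ratio, kept_home) pairs, where the
    ratio is household income divided by the poverty line and kept_home is a bool.
    Returns a dict of band -> retention rate, or None for an empty band.
    """
    tallies = {"below": [0, 0], "at_or_above": [0, 0]}  # [kept, total]
    for ratio, kept_home in cases:
        band = "at_or_above" if ratio >= threshold else "below"
        tallies[band][1] += 1
        if kept_home:
            tallies[band][0] += 1
    return {
        band: (kept / total if total else None)
        for band, (kept, total) in tallies.items()
    }
```

Rerunning the comparison with different `threshold` values is one way a program could check whether a cutoff still matches the outcomes it is seeing.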

Cleveland’s Lessons Learned About Using Outcomes Data

Since it began using its outcomes system, Cleveland has learned a range of lessons about how outcome measures can be most useful. The most significant are discussed below.

- Many important aspects of the program’s work cannot be quantified. The program’s management and staff have concluded that critical aspects of its work cannot be meaningfully quantified. These include the quality of an advocate’s casework, the impact of the program’s work on a client’s sense of well-being (e.g., from avoiding homelessness, escaping from an abusive partner) or empowerment, the impact of the program’s work with community organizations, and whether and how its work increases the accountability and accessibility of the justice system and government entities to the low-income population.

- Every measure is imperfect. The program recognizes that the outcome measures it uses reflect assumptions and practices that can limit their validity and value. Questions include: Are they measuring the right things? Are the definitions of success appropriate? Are the measures based on the appropriate indicators? With regard to the financial outcomes, is the assessment about what the clients’ finances would have been without the program’s intervention valid? Is the method used to combine (“roll up”) various outcomes to determine success in achieving strategic goals valid? Are the advocates accurately reporting case outcome information? The program addresses these issues by recognizing these limitations, trying to make refinements to overcome them, and being transparent about them.

- Consistency is important. Because there may be different legitimate answers to each outcome question, it is important that staff members use consistent definitions and assessments in responding.

- Outcome measures are necessary, but not the only tool to assess effectiveness. Outcomes provide valuable insights about the impact and value of Cleveland’s work. However, outcomes must be used in combination with a wide range of other assessment tools and datasets to effectively analyze and maximize the effectiveness of program operations and benefits for clients. These include CSR data, Census data and data from other federal and state agencies about the client population’s characteristics (e.g., geographic location, economic and demographic data, education and employment status), ongoing engagement with client and community groups, surveys and interviews with staff and community members, and GPS or similar data to align services with need.

- Ongoing evaluation and improvement of the system is essential. The program’s management and staff engage in a continuous assessment of the outcomes system to identify ways it is—and is not—working. A key aspect of this process is to ensure that the system does not distort the program’s work, such as by driving it to “cherry-pick” cases that can be easily measured and result in high success rates.